Well they fuggin broke it.

I've never had it act more stupid than it has today. I'm literally about to cancel and try Gemini Advanced or Copilot Pro.

Anyone got any experience with either? I'm all ears.

I use Copilot, but I dislike it for coding. The "place a comment and Copilot will fill it in" feature barely works and is mostly annoying. It works for common stuff like "// write a function to invert a string", the kind of thing you'd see in demos: common functions you'd otherwise copy-paste from Stack Overflow. But beyond that, it doesn't really understand when you want to modify something. I've already turned that feature off.
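For reference, this is roughly the kind of demo-grade function that comment is meant to produce. A minimal hand-written sketch in Python, assuming "invert" means "reverse"; the name `invert_string` is hypothetical and this is not captured Copilot output:

```python
def invert_string(s: str) -> str:
    """Return s with its characters in reverse order, e.g. "abc" -> "cba"."""
    # A slice with step -1 walks the string from the last character to the first
    return s[::-1]


print(invert_string("Copilot"))  # prints "tolipoC"
```

Note that this reverses Unicode code points, so characters built from several code points (some emoji, letters with combining accents) can come out scrambled.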

The chat is semi-decent, but the "it understands the entire file you have open" concept also only works about half the time. The other half, it responds with something irrelevant because it never received the code or method your question was about.

I opted to just use the OpenAI API and built a Slack bot I can chat with (replies in a Slack thread share one context, just like a ChatGPT conversation, while new messages in the main channel start a new context). So far that still works best for me.
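A minimal sketch of the thread-equals-context idea, in Python. It assumes Slack-style message events carrying `ts` and an optional `thread_ts` (a top-level message has no `thread_ts`, so its own `ts` starts a new context). `ThreadedChat` and the `ask_model` callback are hypothetical names; in a real bot, `ask_model` would wrap a call to the OpenAI chat API, which is left out here so the sketch runs offline.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


class ThreadedChat:
    """Keeps one chat history per Slack thread (hypothetical sketch)."""

    def __init__(self, ask_model: Callable[[List[Message]], str]) -> None:
        self.ask_model = ask_model
        # Maps a thread's root timestamp to that thread's message history
        self.histories: Dict[str, List[Message]] = defaultdict(list)

    def handle(self, text: str, ts: str, thread_ts: Optional[str] = None) -> str:
        # Replies share their parent's history; top-level messages start a new one
        key = thread_ts or ts
        history = self.histories[key]
        history.append({"role": "user", "content": text})
        reply = self.ask_model(history)
        history.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: List[Message]) -> str:
    # Stand-in for a real API call: reports how much context it was given
    return f"seen {len(messages)} message(s)"


bot = ThreadedChat(fake_model)
print(bot.handle("Explain async/await", ts="100.1"))                      # seen 1 message(s)
print(bot.handle("Now show an example", ts="100.2", thread_ts="100.1"))  # seen 3 message(s)
print(bot.handle("Unrelated question", ts="200.1"))                        # seen 1 message(s)
```

The second call sees three messages (the first question, the first answer, and the follow-up) because it lands in the same thread, while the third starts fresh.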

If you like, you can create specific slash commands that preface your questions. For example, "/askcsharp" in Slack could prepend something like "You are an assistant that provides C#-based answers. Use var for variables, xUnit and FluentAssertions for tests."
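The slash-command idea boils down to a lookup table from command to system prompt. A minimal Python sketch, assuming the role/content message format used by OpenAI's chat API; `SYSTEM_PROMPTS` and `build_messages` are hypothetical names, and only the `/askcsharp` prompt text is adapted from the comment above.

```python
from typing import Dict, List

# Hypothetical command -> system prompt table
SYSTEM_PROMPTS: Dict[str, str] = {
    "/askcsharp": (
        "You are an assistant that provides C#-based answers. "
        "Use var for variables, xUnit and FluentAssertions for tests."
    ),
}


def build_messages(command: str, question: str) -> List[Dict[str, str]]:
    """Turn a slash command plus its text into a chat-style message list."""
    messages: List[Dict[str, str]] = []
    prompt = SYSTEM_PROMPTS.get(command)
    if prompt is not None:
        # Known command: its system prompt goes first and steers every answer
        messages.append({"role": "system", "content": prompt})
    messages.append({"role": "user", "content": question})
    return messages


print(build_messages("/askcsharp", "How do I parse JSON?"))
```

Slack's slash-command payload delivers the command name and the rest of the typed text as separate `command` and `text` fields, so they map onto the two arguments directly.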

If you want to get really fancy, you can even vectorize your codebase, store the embeddings in Pinecone or pgvector, and have an "entire-codebase-aware AI".
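To show the shape of the "entire codebase aware" idea without any external services, here is a toy Python sketch: it "embeds" code chunks as bags of tokens, ranks them by cosine similarity to the question, and pastes the best matches into the prompt. This is a deliberate stand-in. A real setup would use an embedding model and keep the vectors in Pinecone or pgvector rather than the in-memory `CodeIndex` list, and every name here is hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': counts of lowercase identifier-like tokens.
    A real pipeline would call an embedding model here instead."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class CodeIndex:
    """In-memory stand-in for a vector store such as Pinecone or pgvector."""

    def __init__(self) -> None:
        self.chunks: List[Tuple[str, str, Counter]] = []

    def add(self, path: str, code: str) -> None:
        self.chunks.append((path, code, embed(code)))

    def search(self, query: str, k: int = 2) -> List[Tuple[str, str]]:
        """Return the k (path, code) chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.chunks, key=lambda c: cosine(q, c[2]), reverse=True)
        return [(path, code) for path, code, _ in ranked[:k]]


def build_prompt(index: CodeIndex, question: str, k: int = 2) -> str:
    """Prepend the most relevant code chunks to the user's question."""
    context = "\n\n".join(f"# {path}\n{code}" for path, code in index.search(question, k))
    return f"Relevant code:\n{context}\n\nQuestion: {question}"


index = CodeIndex()
index.add("auth.py", "def login(user, password):\n    return check_password(user, password)")
index.add("report.py", "def render_report(rows):\n    return format_rows(rows)")
print(index.search("where is the user login handled?", k=1)[0][0])  # auth.py
```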

It takes a bit of time to custom-build something, but these AIs are basically tools, and a custom-built tool for your specific purpose is probably going to outperform a generic one.

> // write a function to invert a string

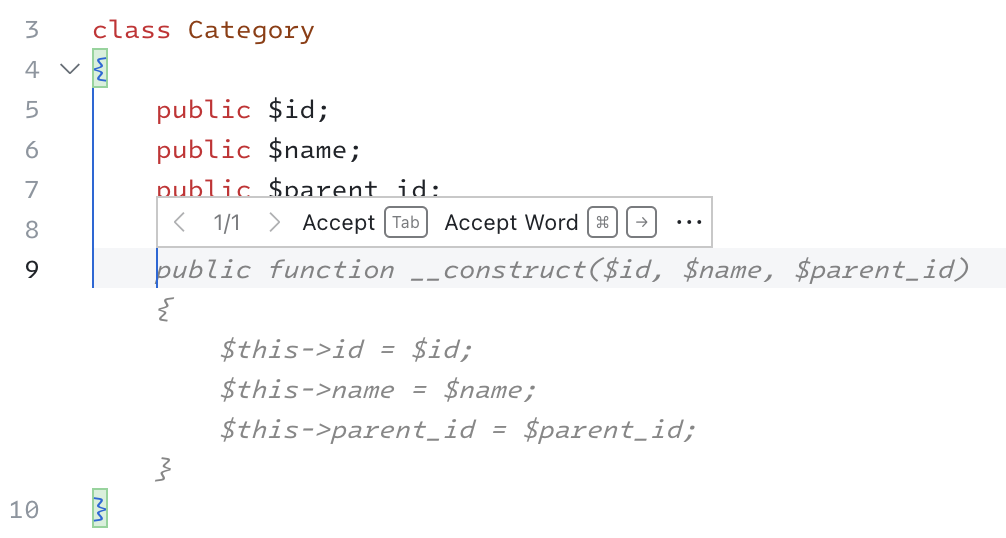

That's not how I use it at all. I mean I started out doing that, but these days it's more like this (for anyone who hasn't used copilot, the grey italic text is the auto-generated code - tab to accept, or just type over it to ignore it):

Sure, I totally could have written the constructor myself. But it would have taken longer, and I probably would've made a few typos. And by the way, it's way better than copying from Stack Overflow, because it knows your coding style, it knows which other classes etc. exist in your project, and so on.

It's also pretty good at refactoring. You can tell it to refactor something to use a different coding pattern, for example, and it'll write 30 lines of code and then show you a git-style diff.

Not to mention you can now ask questions about your code, like "How has X been implemented in this 20-year-old project I started working on today?" Or one I asked the other day: "I'm getting this error; which files might be causing it?" It gave me a list of 15 files, and I was able to find the cause in a few minutes, even though the error itself gave me no context to go on.

I second this. GitHub Copilot is an amazing coding tool, not just for boilerplate but also for filling in complementary logic, brainstorming test cases, rewriting and refactoring, reducing typos and copy-paste errors, writing documentation, prototyping code from a human-written description, and probably several other things, at varying levels of competence.

Makes me wonder what the people who don't find it useful are trying to do with it. Sure, you'll probably need or want to change some things, but that's miles ahead of writing it from scratch.

Hell, if you start declaring a function with a good name and good names and types for the arguments, it'll often write a mostly correct implementation, using the rest of the file as context.
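As a hypothetical illustration of the "good name plus good argument names and types" point (the `Order` type and the function below are invented, and the body was written by hand rather than captured from Copilot): once the signature and docstring read like this, there is very little room left for the implementation to go wrong.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Order:
    customer_id: int
    total_cents: int


def total_spent_by_customer(orders: List[Order], customer_id: int) -> int:
    """Sum total_cents over every order placed by customer_id."""
    # The name, argument names, and types already pin down almost all of this body
    return sum(order.total_cents for order in orders if order.customer_id == customer_id)


orders = [Order(1, 500), Order(2, 300), Order(1, 250)]
print(total_spent_by_customer(orders, 1))  # prints 750
```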

Copilot Pro?

Well, I have Copilot Pro, but I was mainly talking about GitHub Copilot. I don't think having Copilot Pro really affects GitHub Copilot's performance.

I mainly use AI for programming (both for my own coding and inside an AI-powered product I'm building), so I don't really know what you intend to use AI for. Outside of programming, I can't really speak to their performance.

And I think Copilot Pro just gives you Copilot inside Office, right? And more image generations per day? I can't really say I've used that. For image generation, I use either the OpenAI API again (DALL-E 3) or Replicate (mostly SDXL).

I mainly use it for troubleshooting, which covers everything from Bash to Node and React, Python to... DNS. Idk.

Copilot Pro, confusingly, isn't related to GitHub Copilot. I do have GitHub Copilot; I agree GPT-4 is better in general, just not in my IDE.

Idk how Microsoft has bungled this naming... They own GitHub now, right?!

So there's Microsoft Copilot, which is like Bing Chat for Windows. But now there's also Microsoft Copilot Pro for $20/mo, which uses GPT-4 Turbo. I haven't seen much on it.

And even more recently, Google Bard became Gemini, and you can get Gemini Ultra for $20/mo. It's supposedly meant to contend with ChatGPT 4 as well.

> Idk how Microsoft has bungled this naming

You haven't been following Microsoft for long, have you? The first version of Windows was version 3.0 (there were technically earlier versions, but they were "a work in progress" and weren't really usable at all). The third Xbox was called "Xbox One".

I have been using Windows since before 3.0.

Your point, while comical, is kind of irrelevant when it comes to giving two independent products the same name.

I briefly used Bard before they rolled it into Gemini, and Bard was really quick and accurate. Now that Bard is Gemini, they seem to have deliberately handicapped the free product: it needs very specific prompts to give a helpful answer, and it takes twice as long as Bard did. Currently I use Codeium (not to be confused with Codium, which is another AI code product) for code review and as a linter, and Bing AI for general questions. Bing AI is quick, but I've noticed it gives the simplest, quickest answer it can, almost as if it returns the first answer it finds and nothing else, which can be good or bad. As for Copilot, I refuse to pay for it, because it really is a good product and should be available to everyone who wants to learn coding. I get it, they gotta make money; I just think it's bullshit to withhold something like that.

I tried Gemini, and it produced some of the worst writing I’ve seen in a while. YMMV.

Did you pay for Advanced, or are you referring to the free version everyone has access to?

The free one. I found it a lot worse than ChatGPT’s free tier. I didn’t test it in much depth, but I threw some of the same “rewrite this copy but make it better in <this> way” tasks I’ve used GPT for at it, and it turned out ridiculously bad writing.

I then asked it whether it thought what it had produced was good writing, and, comically, it told me the writing was bad and full of clichés and that I should avoid using it. I’m not making that up.

I'm not interested in reviews of the free tier. I'm looking to see how ChatGPT4 compares with Copilot Pro and Gemini Advanced.

This is the best summary I could come up with:

The San Francisco artificial intelligence start-up said on Tuesday that it was releasing a new version of its chatbot that would remember what users said so it could use that information in future chats.

If a user mentions a daughter, Lina, who is about to turn 5, likes the color pink and enjoys jellyfish, for example, ChatGPT can store this information and retrieve it as needed.

With this new technology, OpenAI continues to transform ChatGPT into an automated digital assistant that can compete with existing services like Apple’s Siri or Amazon’s Alexa.

Last year, the company allowed users to add instructions and personal preferences, such as details about their jobs or the size of their families, that the chatbot should consider during each conversation.

(The New York Times sued OpenAI and its partner, Microsoft, in December, for copyright infringement of news content related to A.I.)

But creating and storing a separate list of personal memories that the chatbot can bring up in conversations could raise privacy concerns.

The original article contains 550 words, the summary contains 167 words. Saved 70%. I'm a bot and I'm open source!

So they're moving toward personal models, then. Considering how much data harvesting Google and Facebook have done over the years, they should be the grand masters of personal LLMs.