this post was submitted on 05 Oct 2024

Science Memes

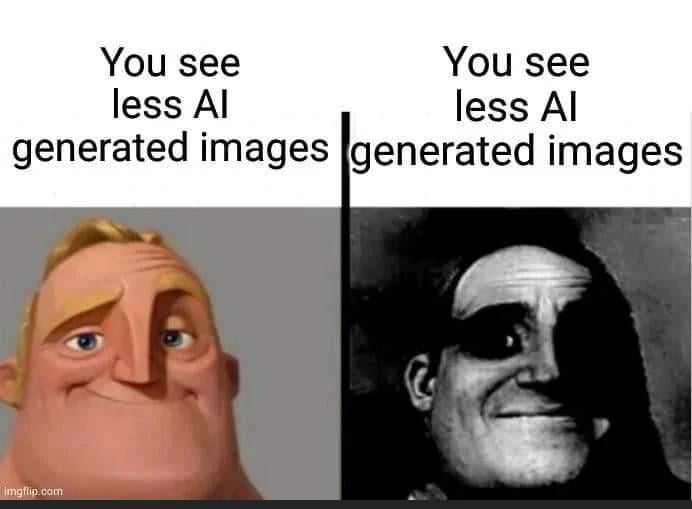

That's my issue with people saying stuff like "I can immediately tell when a picture is made with AI and I hate how they look"

Your assessment doesn't take into account all the false negatives. You have no idea how many pictures have tricked you already. By definition, the picture is badly made if you can immediately tell it's AI. That's a bit like seeing the most flamboyantly gay person on the street and thinking all gays look like that and that you can always spot them, while the closeted friend you're with flies perfectly under the radar.

Good old toupee fallacy.

I didn't know it had a name. Thanks!

I recently saw a photo on some website. It was from a Trump rally, and people had these freaky, ecstatic looks on their faces. Somebody commented that it looked like AI. Other people soon agreed; one of them remarked on the bizarre, "alien" hand on one of the babies in the crowd. That hand did look weird. There were too few fingers. It looked like a Teenage Mutant Ninja Turtle hand.

The problem was that this image was originally from a news story published years before ChatGPT and the current AI boom. For this to be AI, the photographer would've had to have access to experimental software that was years away from being released to the public.

Sometimes people just look weird and, sometimes, they have weird hands, too.

While your point that sometimes people just have AI-image-associated traits is very salient, I worry you might not be considering the lengths to which these things will be used, and why online discourse (in my worried opinion) is utterly fucked: the past ain't safe either.

For now we still have archive.org, but without a third-party/external source validating that old content... you can't be sure it's actually old content.

At best, it's trivial to get LLMs to write image-gen prompts to "spice up those old news posts" (without remembering to tag the article as edited/updated, or bypassing that flag entirely)... and, at worst, to utterly fuck the very foundation of a shared and accepted past reality, not just for the present but for anyone using the internet itself to look through the lens of the past.

AI image generators have been around for a fairly long time. I remember deepfake discussion from about a decade ago. I'm not saying the image under discussion is one, though. I remember Alex Jones making conspiracy theories that revolved around Bush and lossy video compression artifacts too.

Reminds me of all the people who believe commercials and advertising don't work on them. Sure, that's why billions are spent on it. Because it doesn't even do anything. Oh, it only works on all the other people?

That's why it is so hard to get that stuff regulated. People believe it doesn't work on them.

That's the real fear of AI. Not that it's stealing art jobs or whatever. But that all it takes is for a politician or businessman to claim something is AI, no matter how corroborated it is, and throw the whole investigation for a loop. It's not a thing now, because no one knows about advanced AI (except for internet bubbles) and it's still thought that you can easily differentiate images, but imagine even 5 years from now, or 10.

A more timely example is the people who think they can always tell when someone is trans.

Many images that are unedited or made with older AI I can detect with one look. A few more I can find by looking for inconsistencies like hands or illogical items.

However, I am sure there will be more AI-generated images, maybe even slightly edited afterwards, that I can't detect.

You will need an AI to detect them, since, at least in images, AI is detectable by the way it creates the files.

In AI-generated sound you can see it in the waveform: it has less random noise altogether and it seems like a huge, well, wave. I wonder if something similar is true for images.

Basically yes: lack of detail, especially in small things like hair or fingers. AI images usually have less texture/definition. Though, once again, it depends on the technique being used.

I heard they managed to put some noise into AI-generated audio, so it's even more difficult to tell.

It also doesn't help that they are working to improve it all the time.