this post was submitted on 30 Jul 2024

Firefox

A place to discuss the news and latest developments on the open-source browser Firefox

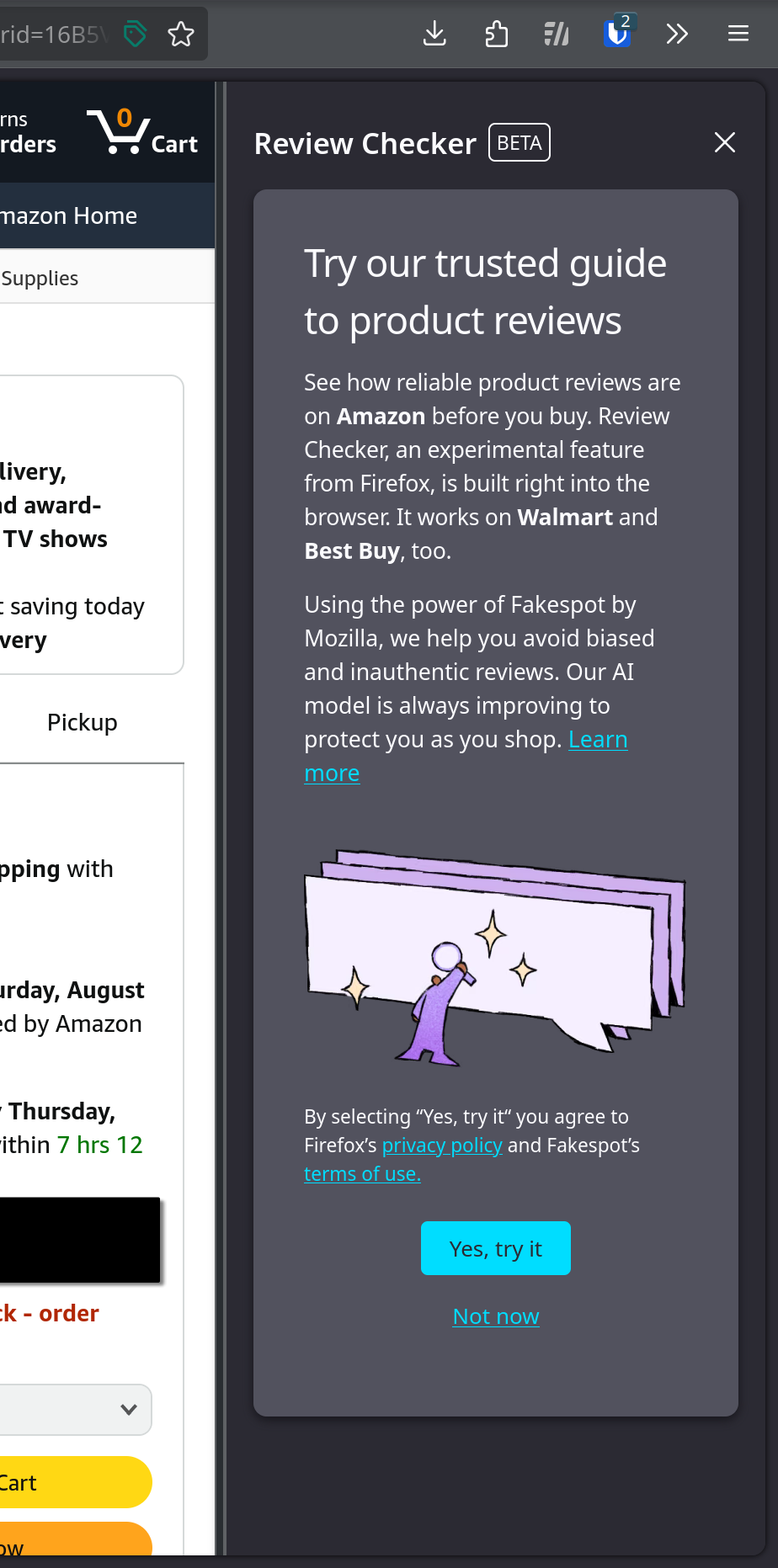

Cool it with the universal AI hate. There are many kinds of AI; detecting fake reviews is a totally reasonable and useful application.

I have serious doubts about an AI's ability to reliably spot fakes.

AI: "This is definitely a fake review because I wrote it."

There are literal bots on Reddit, far less complex than this, that estimate how likely a story is to be reliable and truthful, using facts and fact-checkers. They're not always right, but they ARE useful. Or were; I'm not sure about now, since it's been over a year since I left.

Would you mind pointing me toward those AIs? The newfangled fact-check bot here on Lemmy seems to just pull its data from a premade database, so there's no AI involved.

If by "reliably" you mean 99% certainty about one particular review, yeah, I wouldn't believe it either. A 95% confidence interval for what proportion of a given page's reviews come from bots, though? That's plausible. If a human can tell whether a review was botted, you can certainly train a model to do so as well.
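The page-level idea can be made concrete. A minimal sketch, assuming a hypothetical per-review classifier whose sensitivity and specificity were measured on labeled data: compute a 95% Wilson score interval for the share of reviews it flags, then map the endpoints through the Rogan-Gladen correction to estimate the share that are actually bots. The function names and example numbers (200 reviews, 30 flagged, sensitivity 0.8, specificity 0.95) are made up for illustration, and the sketch ignores uncertainty in the sensitivity and specificity themselves.

```python
import math


def wilson_interval(k: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion k/n (z=1.96 gives ~95%)."""
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("need n > 0 and 0 <= k <= n")
    p_hat = k / n
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def corrected_prevalence(q: float, sensitivity: float, specificity: float) -> float:
    """Rogan-Gladen estimator: turn a flagged rate q into an estimated true bot rate."""
    youden_j = sensitivity + specificity - 1
    if youden_j <= 0:
        raise ValueError("classifier must beat chance (sensitivity + specificity > 1)")
    return min(1.0, max(0.0, (q + specificity - 1) / youden_j))


def bot_share_interval(
    flags: list[bool], sensitivity: float, specificity: float, z: float = 1.96
) -> tuple[float, float]:
    """Approximate CI for the true bot share on one page.

    `flags` holds a hypothetical classifier's verdict per review. The correction
    is monotone in q, so mapping the interval endpoints is valid; uncertainty in
    sensitivity/specificity is ignored.
    """
    lo, hi = wilson_interval(sum(flags), len(flags), z)
    return (
        corrected_prevalence(lo, sensitivity, specificity),
        corrected_prevalence(hi, sensitivity, specificity),
    )


if __name__ == "__main__":
    # Made-up example: 200 reviews, 30 flagged, sensitivity 0.8, specificity 0.95.
    page_flags = [True] * 30 + [False] * 170
    print(bot_share_interval(page_flags, sensitivity=0.8, specificity=0.95))
```

With those made-up numbers the page-level estimate comes out to roughly 8% to 21% bot reviews, even though no single review is classified with anything near 99% certainty.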

But it doesn't work. This stuff never does.

What do you mean by "this stuff"? Machine learning models are a fundamental part of spam prevention and have been for years. The concept just flips it around for use by the individual rather than the platform.

Bayesian filtering, yes. LLMs? No.
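For contrast, a classic Bayesian spam filter is small enough to sketch in a few lines. This is a minimal multinomial naive Bayes classifier with add-one smoothing, assuming a hypothetical two-label setup ("fake" vs. "genuine") and a toy, made-up training set; it is not the method used by any particular product or platform.

```python
import math
import re
from collections import Counter

LABELS = ("fake", "genuine")  # hypothetical label names for this sketch


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesFilter:
    """Multinomial naive Bayes with Laplace (add-one) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.word_counts[label].update(tokenize(text))
        self.doc_counts[label] += 1

    def _log_scores(self, text: str) -> dict[str, float]:
        # log P(label) + sum of log P(word | label); assumes every label has
        # at least one training document.
        vocab = set().union(*self.word_counts.values())
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in LABELS:
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                if word in vocab:  # words never seen in training are skipped
                    score += math.log((counts[word] + 1) / (total_words + len(vocab)))
            scores[label] = score
        return scores

    def prob_fake(self, text: str) -> float:
        """P(fake | text), computed from the log scores without overflow."""
        scores = self._log_scores(text)
        diff = scores["genuine"] - scores["fake"]
        if diff >= 0:
            e = math.exp(-diff)
            return e / (1 + e)
        return 1 / (1 + math.exp(diff))


if __name__ == "__main__":
    nb = NaiveBayesFilter()
    # Toy, made-up training reviews.
    nb.train("best product ever buy now amazing amazing", "fake")
    nb.train("five stars amazing deal buy now", "fake")
    nb.train("battery lasted about two days with moderate use", "genuine")
    nb.train("the strap broke after a month but support replaced it", "genuine")
    print(nb.prob_fake("amazing deal buy now"))
```

Real filters train on thousands of labeled examples; with four toy reviews the probabilities only demonstrate the mechanics.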