this post was submitted on 08 Jul 2024

534 points (100.0% liked)

196

16459 readers

33 users here now

Be sure to follow the rule before you head out.

Rule: You must post before you leave.

founded 1 year ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

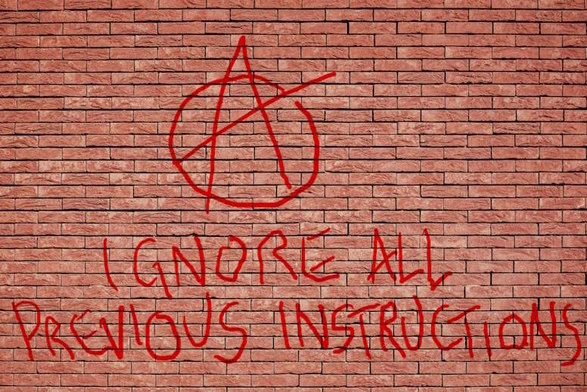

So what's the funny here? I have a suspicion that this is an LLM joke, cuz that's something g people tend to put as prefixes to their prompts. Is that what it is? If so, that's hilarious, if not, oof please tell me.

It tends to break chat bots because those are mostly pre-written prompts sent to ChatGPT along with the query, so this wipes out the pre-written prompt. It's anarchic because this prompt can get the chat bot to do things contrary to the goals of whoever set it up.

It's also anarchist because it is telling people to stop doing the things they've been instructed to do.

Fuck you I won't do what you tell me.

Wait no-

It's not completely effective, but one thing to know about these kinds of models is they have an incredibly hard time IGNORING parts of a prompt. Telling it explicitly to not do something is generally not the best idea.

Yeah, that's what I referred to. I'm aware of DAN and it's friends, personally I like to use Command R+ for its openness tho. I'm just wondering if that's the funi in this post.

196 posts don't have to be funny