this post was submitted on 12 Jun 2024

Serious Question:

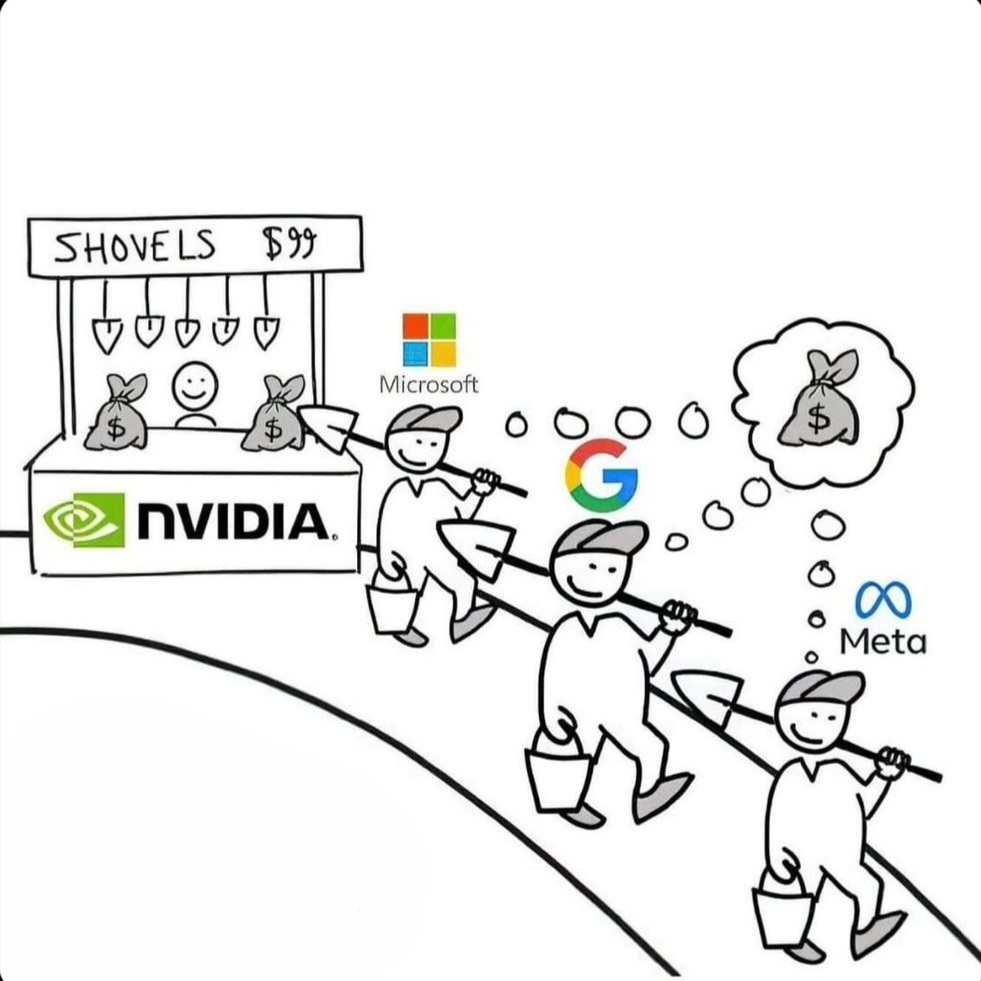

Why is Nvidia AI king and I see nothing of AMD for AI?

I'm an AI Developer.

TLDR: CUDA.

Getting ROCm to work properly is like herding cats.

You need a custom implementation for the specific operating system, the driver version must be locked and compatible, especially with a Workstation / WRX card, where the Pro drivers are especially prone to breaking, you need the specific dependencies compiled for your variant of hipBLAS, or ZLUDA, and if that doesn't work, you need ONNX transition graphs, but then you find out PyTorch doesn't support ONNX unless it's 1.2.0, which breaks another dependency of X-Transformers, which then breaks because the version of hipBLAS is incompatible with that older version of Python and ...

Inhales

And THEN MAYBE it'll work at 85% of the speed of CUDA. If it doesn't crash first due to an arbitrary error such as CUDA_UNIMPLEMENTED_FUNCTION_HALF.

You get the picture. On Nvidia, it's click, open, CUDA working? Yes? Done. You don't spend 120 hours fucking around and recompiling for your specific use case.
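For what it's worth, the "CUDA working? Yes?" check can be sketched in a few lines. This is only a rough illustration, not anyone's official procedure: `describe_backend` is a made-up helper name, and it assumes a stock PyTorch build, where ROCm builds reuse the `torch.cuda` namespace and set `torch.version.hip`.

```python
def describe_backend():
    """Return a short string describing the GPU backend this PyTorch build can use.

    Hypothetical helper for illustration; not part of PyTorch itself.
    """
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # On ROCm builds this same call reports AMD GPUs.
    if not torch.cuda.is_available():
        return "no CUDA/ROCm device visible"

    # torch.version.hip is a version string on ROCm builds and None on CUDA builds.
    hip = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip}" if hip else f"CUDA {torch.version.cuda}"
    return f"{backend}: {torch.cuda.get_device_name(0)}"


print(describe_backend())
```

If this prints a device name, the easy part is done. It says nothing about whether a given kernel is actually implemented for your card.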

Also, you need a supported card. I have a potato going by the name RX 5500, which is not on the supported list. I have the choice between three ROCm versions:

#1 is what I'm actually using. I can deal with a random crash every other day to every other week or so.

It really would not take much work for them to have a fourth version: one that's not "supported-supported" but "we're making sure this thing runs". Current ROCm code, using kernels written for other cards if they happen to work, and generic code otherwise.

Seriously, ROCm is making me consider Intel cards. Price/performance is decent, there's plenty of VRAM (at least for its class), and apparently their API support is actually great. I don't need CUDA or ROCm, after all; what I need is PyTorch.
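To make the "what I need is PyTorch" point concrete, here is a minimal sketch of device-agnostic code. `pick_device_name` is a hypothetical helper, and the `torch.xpu` check assumes a recent PyTorch build with Intel GPU support; on older builds the attribute simply may not exist, which the `getattr` guard covers.

```python
def pick_device_name(torch_module):
    """Return "cuda", "xpu" or "cpu" depending on what the given torch module reports.

    Hypothetical helper for illustration. "cuda" covers both Nvidia (CUDA)
    and AMD (ROCm) builds, since ROCm builds reuse the torch.cuda namespace.
    """
    cuda = getattr(torch_module, "cuda", None)
    if cuda is not None and cuda.is_available():
        return "cuda"
    # Assumption: Intel GPUs show up under torch.xpu on builds that support them.
    xpu = getattr(torch_module, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


try:
    import torch
except ImportError:
    torch = None  # lets the sketch run even without PyTorch installed

if torch is not None:
    device = torch.device(pick_device_name(torch))
    x = torch.randn(4, 4, device=device)
    # The same model code runs unchanged on whichever backend was picked.
    print(device, tuple((x @ x.T).shape))
```

The model code never mentions the vendor. Only the device string changes, which is why the backend matters much less once the PyTorch build itself is stable.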

Simple Answer:

CUDA

So, AMD has started slapping the AI branding onto some of their products, but they haven’t leaned into it quite as hard as Nvidia has. They’re still focusing on their core product lineup and developing the actual advancements in chip design.

I think it's in the pipeline. AMD has bought Xilinx, which builds FPGAs and already had some AI-specific cores in their processors. I believe they're developing that further and integrating it into their GPUs now.