Hi,

I have a Pi-Hole set up on my home network, which I access from anywhere through a SWAG reverse proxy at https://pihole.mydomain.org. I have set up a local DNS record in Pi-Hole to point mydomain.org to the local IP of the SWAG server.

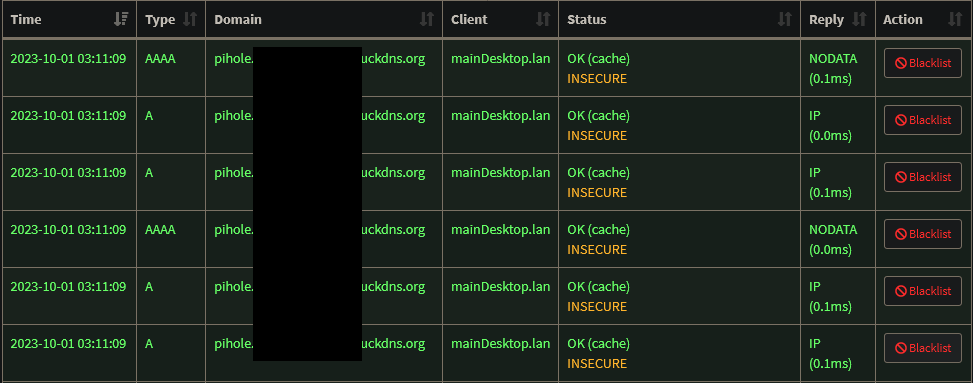

Access from anywhere (local or not) works well. It's just that when I access some services (including the Pi-Hole) from my desktop through the reverse proxy via the DNS record (i.e. on the LAN), the Pi-Hole log gets completely spammed with requests like the ones in the attached image. To be clear, I cropped the image, but it is pages and pages of the same. This is also the case for e.g. the qBittorrent Docker container I have set up. So I guess it happens for 'live' pages which update their stats continuously, which makes sense. But the Pi-Hole log is unusable in this state. This does not occur when I access the services externally through the same reverse proxy, or when I access them locally by their local IP.

The thing is, I have already selected "Never forward non-FQDN A and AAAA queries" in the Pi-Hole settings. I also have "Never forward reverse lookups for private IP ranges", "Use DNSSEC", and "Allow only local requests" enabled, but they seem less relevant.

The Pi-Hole, SWAG server, and PC I am accessing them from are three different machines on my LAN.

Any way to filter out just those queries? I obviously want to preserve all the other legitimate queries coming from my desktop.

EDIT: Thanks for the responses. Unfortunately the problem persists, but I discovered something new. This only happens when accessing the page from Firefox on desktop; not from another desktop browser, and not from Firefox on Android. So it actually seems to be a Firefox problem, not a Pi-Hole one. I thought this might have something to do with Firefox's DNS-over-HTTPS, so I tried both adding an exception for my domain name and disabling it altogether, but neither solved it.

Sounds like the DNS TTL (Time to Live) is set extremely low, preventing clients from caching the record. Each time your browser makes a request (such as updating the graphs), it submits a new DNS query.
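If you want to check the TTL that is actually being handed out, here is a minimal Python sketch that sends a plain A-record query over UDP and reads the TTL of each answer. It is only an illustration: the function names are made up for this example, it ignores truncated responses and error codes, and the server address and hostname you pass in are whatever applies on your network.

```python
import socket
import struct


def encode_name(name: str) -> bytes:
    """Encode a hostname as DNS labels (length-prefixed, zero-terminated)."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name: str, query_id: int = 0x1234) -> bytes:
    """Build a minimal A-record query with the 'recursion desired' flag set."""
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    question = encode_name(name) + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN
    return header + question


def skip_name(msg: bytes, offset: int) -> int:
    """Return the offset just past a (possibly compressed) name."""
    while True:
        length = msg[offset]
        if length == 0:
            return offset + 1          # end of an uncompressed name
        if length & 0xC0 == 0xC0:
            return offset + 2          # compression pointer is two bytes
        offset += 1 + length


def parse_ttls(msg: bytes) -> list:
    """Return the TTL (in seconds) of every record in the answer section."""
    _id, _flags, qdcount, ancount, _ns, _ar = struct.unpack("!HHHHHH", msg[:12])
    offset = 12
    for _ in range(qdcount):
        offset = skip_name(msg, offset) + 4    # skip QTYPE and QCLASS
    ttls = []
    for _ in range(ancount):
        offset = skip_name(msg, offset)
        _rtype, _rclass, ttl, rdlength = struct.unpack("!HHIH", msg[offset:offset + 10])
        ttls.append(ttl)
        offset += 10 + rdlength
    return ttls


def query_ttls(name: str, server: str, port: int = 53, timeout: float = 2.0) -> list:
    """Ask `server` for the A record of `name` and return the answer TTLs."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name), (server, port))
        response, _ = sock.recvfrom(512)
    return parse_ttls(response)
```

Calling `query_ttls("pihole.mydomain.org", "<your Pi-hole's IP>")` once against the Pi-hole and once against a public resolver should show whether the low TTL comes from the Pi-hole or from upstream.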

According to this post, this is intentional behaviour for Pi-hole, to support situations where you change a domain from blocked to allowed. The same post also references the necessary file modifications, should you wish to extend the TTL regardless.

The only downside you'll notice is a delay between whitelisting a domain and it actually being unblocked. You'll need to wait for the TTL to expire. Setting it to something like 15 minutes would be a reasonable compromise.
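To put rough numbers on that trade-off, here is a small Python sketch that counts how many DNS lookups a client would send if it honours the TTL literally. The one-second polling interval and the 2-second "low" TTL are assumptions picked for illustration, not values measured from Firefox or read from a Pi-hole config.

```python
def count_dns_queries(duration_s: int, poll_interval_s: int, ttl_s: int) -> int:
    """Count lookups from a client that re-resolves once its cached record expires."""
    queries = 0
    expires_at = 0  # cache starts empty, so the first request always resolves
    for now in range(0, duration_s, poll_interval_s):
        if now >= expires_at:
            queries += 1
            expires_at = now + ttl_s
    return queries


# One hour of a page that refreshes once per second (assumed interval).
low_ttl = count_dns_queries(3600, 1, 2)      # hypothetical 2-second TTL
long_ttl = count_dns_queries(3600, 1, 900)   # the suggested 15 minutes
print(low_ttl, long_ttl)  # 1800 4
```

Under those assumptions the log goes from 1800 entries per hour for that page to 4, and the cost is at most a 15-minute wait before a whitelist change reaches a client that already cached the record.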

That would be surprising; most HTTP clients reuse network connections and connections are deliberately kept open to reduce the overhead of reopening a connection (including latency in doing a DNS lookup).

Then again, I've seen worse ;)

Not that unusual depending on the software. A lot of them honour the TTL literally.

One piece of enterprise software I know that does it is VMware vCenter. I'm sure there's plenty of consumer software that retries excessively.

It would make sense in this case. Blocking via a proxy or firewall forcibly breaks the connection, whereas the Pi-hole just manipulates otherwise standard DNS flows to accomplish something similar, but it tries to keep the feel that a change takes effect when you make it, not some time afterwards. If it were the Pi-hole setting the TTL, though, that should be the case for almost any domain, not just the duckdns.org one. Even with session reuse, modern sites pull things from all over; lord help you if you've ever had to selectively filter a site like CNN and the gazillion links it draws from.

That leads me to think it's actually the DDNS provider that has the short TTL, rather than the Pi-hole cutting it down. When you come in externally, the response comes from the public resolver and never gets logged by the Pi-hole. Internally, since the Pi-hole isn't the authority, it sends a recursive query upstream, and that gets logged.

One way of fixing it might be to create a separate internal domain (a .local or whatever non-routable name you like) and set a static response for it, so that the Pi-hole is the authority. That should keep it from having to check with a service (duckdns) that by design is meant to change frequently, and so probably does have a very low TTL.