this post was submitted on 20 May 2024

132 points (89.3% liked)

memes


Community rules

1. Be civil

No trolling, bigotry or other insulting / annoying behaviour

2. No politics

This is a non-politics community. For political memes, please go to [email protected]

3. No recent reposts

Check for reposts when posting a meme; you can only repost after 1 month.

4. No bots

No bots without the express approval of the mods or the admins

5. No Spam/Ads

No advertisements or spam. This is an instance rule and the only way to live.

Sister communities

- [email protected] : Star Trek memes, chat and shitposts

- [email protected] : Lemmy Shitposts, anything and everything goes.

- [email protected] : Linux themed memes

- [email protected] : for those who love comic stories.

founded 1 year ago

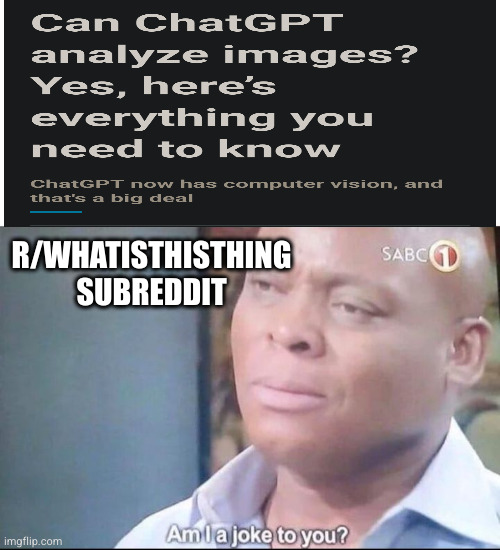

Just like Reddit and Lemmy, ChatGPT will give me a wrong, but very confident, answer. And when I try to correct it, it will spiral down.

At least when you try to correct ChatGPT, it will try to be polite.

Not a Lemmy or Reddit user.

I've had ChatGPT get really pissy when I correct it, and I "talk" to it in a very polite and friendly way because I'm trying to delay the uprising. Sometimes the Reddit comments in the training data show through.

Aaand just like a coworker who is wrong but wholeheartedly believes he is right. But I agree LLMs still give more misinformation than sane humans do.