I've started encountering a problem that I could use some assistance troubleshooting. I've got a Proxmox system that hosts, primarily, my Opnsense router. I've had this specific setup for about a year.

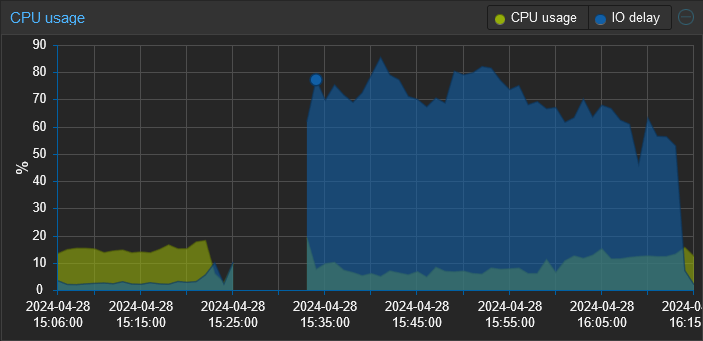

Recently, I've been experiencing sluggishness and noticed that the IO wait is through the roof. Rebooting the Opnsense VM, which normally only takes a few minutes, is now taking upwards of 15-20 minutes. The entire time, my IO wait sits between 50% and 80%.
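For anyone who wants to measure IO wait directly rather than read it off the graph, here is a minimal Python sketch. It assumes a Linux host where the aggregate `cpu` line of `/proc/stat` lists user, nice, system, idle, iowait, irq, softirq and steal in that order. The function names (`parse_cpu_line`, `iowait_percent`, `read_cpu_line`) are made up for illustration and are not part of any Proxmox tooling.

```python
def parse_cpu_line(line):
    """Return the first eight counters of an aggregate 'cpu' line as ints."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line from /proc/stat")
    # Order: user, nice, system, idle, iowait, irq, softirq, steal
    return [int(value) for value in fields[1:9]]


def iowait_percent(before, after):
    """Percentage of CPU time spent in iowait between two samples."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    total = sum(deltas)
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total  # index 4 is the iowait counter


def read_cpu_line(path="/proc/stat"):
    """Read the aggregate 'cpu' line (the first line of /proc/stat on Linux)."""
    with open(path) as handle:
        return handle.readline()
```

To use it, call `read_cpu_line()` twice a few seconds apart and pass both results to `iowait_percent`. A value that stays in the 50-80 range during a reboot would match what the graph shows.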

The system has one disk in it, formatted with ZFS. I've checked dmesg and the syslog for indications of disk errors (this feels like a failing disk) and found none. I also checked the SMART statistics and they all "PASSED".

Any pointers would be appreciated.

Edit: I believe I've found the root cause of the change in performance, and it was a bit of shooting myself in the foot. I've been experimenting with different tools for log collection and the most recent one is a SIEM tool called Wazuh. I didn't realize that upon reboot it runs an integrity check that generates a ton of disk I/O. So when I rebooted this Proxmox server, that integrity check was running on Proxmox, my pihole, and (I think) Opnsense concurrently, all against a single consumer-grade HDD.

Thanks to everyone who responded. I really appreciate all the performance tuning guidance. I've also made the following changes:

- Added a 2nd drive (I have several of these lying around, don't ask), converting the ZFS pool into a mirror. This gives me redundancy and should also improve read performance.

- Configured a 2nd storage target on the same zpool with compression enabled and a 64k block size in proxmox. I then migrated the 2 VMs to that storage.

- Since I'm collecting logs in Wazuh I set Opnsense to use ram disks for /tmp and /var/log.

Rebooted Opnsense and it was back up in 1 minute 42 seconds.

Check the ZFS pool status. You could have lots of errors that ZFS is correcting.
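If you'd rather script that check than eyeball it, here is a rough Python sketch that reads the output of `zpool status` and lists devices with non-zero READ/WRITE/CKSUM counters or a state other than ONLINE. It assumes the usual table layout with a `NAME STATE READ WRITE CKSUM` header. The helper names are my own, and the pool name `rpool` is just the Proxmox default, so adjust it if yours differs.

```python
import subprocess


def parse_error_counters(status_text):
    """Extract (name, state, read, write, cksum) rows from `zpool status` output."""
    rows = []
    in_table = False
    for line in status_text.splitlines():
        tokens = line.split()
        if tokens[:5] == ["NAME", "STATE", "READ", "WRITE", "CKSUM"]:
            in_table = True
            continue
        if in_table:
            if not tokens:
                break  # a blank line ends the config table
            if len(tokens) >= 5:
                rows.append(tuple(tokens[:5]))
    return rows


def devices_with_errors(status_text):
    """Names of devices that are not ONLINE or show a non-zero error counter."""
    flagged = []
    for name, state, read, write, cksum in parse_error_counters(status_text):
        # Counters are compared as strings because zpool may abbreviate large values.
        if state != "ONLINE" or any(count != "0" for count in (read, write, cksum)):
            flagged.append(name)
    return flagged


def run_zpool_status(pool="rpool"):
    """Run `zpool status <pool>` and return its text output."""
    result = subprocess.run(
        ["zpool", "status", pool], capture_output=True, text=True, check=True
    )
    return result.stdout
```

On the host, `devices_with_errors(run_zpool_status())` returning an empty list means nothing in the table is flagged. It does not look at the `errors:` summary line or scrub results, so still read the full output.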

I'm starting to lean towards this being an I/O issue, but I haven't figured out what or why yet. I don't often make changes to this environment since it's running my Opnsense router.

It looks like you could also do a zpool upgrade. This will just upgrade your legacy pools to the newer zfs version. That command is fairly simple to run from terminal if you are already examining the pool.

Edit

Btw, if you have run PVE updates, it may be expecting some newer ZFS feature flags for your pool. A pool upgrade may resolve the issue by enabling the new features.

I've done a bit of research on that and I believe upgrading the zpool would make my system unbootable.

Upgrading a ZFS pool itself shouldn't make a system unbootable even if an rpool (root pool) exists on it.

That could only happen if the upgrade took a shit during a power outage or something like that. The upgrade itself usually only takes a few seconds from the command line.

If it makes you feel better, I upgraded mine with an rpool on it and it was painless. I do have everything backed up tho, so I rarely worry. However, I understand being hesitant.

I'm referring to this.

Unless I'm misunderstanding the guidance.

It looks like you are using legacy BIOS. Mine is using UEFI with a ZFS rpool.

However, like with everything a method always exists to get it done. Or not if you are concerned.

If you are interested it would look like...

Pool Upgrade

Confirm Upgrade

Refresh boot config

Confirm Boot configuration

You are looking for directives like this, to see if they are indeed pointing at your existing rpool.

Here is my file if it helps you compare...

You can see the lines by the linux sections.
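As a rough way to do that comparison without reading the whole file, here is a small Python sketch that pulls the `root=ZFS=` value out of the kernel lines of a boot config. It assumes the Proxmox-style `root=ZFS=<pool>/<dataset>` kernel parameter and looks at lines starting with `linux` (GRUB style) or `options` (systemd-boot style). The function names are made up for the example and the file path is left for you to supply.

```python
def zfs_root_datasets(boot_config_text):
    """Return every dataset named by a root=ZFS= parameter on kernel lines."""
    prefix = "root=ZFS="
    found = []
    for line in boot_config_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        # GRUB uses 'linux ...' lines; systemd-boot entries use 'options ...'.
        if not (tokens[0].startswith("linux") or tokens[0] == "options"):
            continue
        for token in tokens[1:]:
            if token.startswith(prefix):
                found.append(token[len(prefix):])
    return found


def points_at_pool(boot_config_text, pool="rpool"):
    """True if at least one root=ZFS= entry exists and all of them live on `pool`."""
    datasets = zfs_root_datasets(boot_config_text)
    return bool(datasets) and all(
        name == pool or name.startswith(pool + "/") for name in datasets
    )
```

Read your boot config into a string and pass it to `points_at_pool`. A `False` result means either no `root=ZFS=` entry was found or one of them names a different pool, and either case is worth looking at before you reboot.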

Thanks, I may give it a try if I'm feeling daring.