HUMAN’s Satori Threat Intelligence and Research team has uncovered and, in collaboration with Google, Trend Micro, Shadowserver, and other partners, partially disrupted a sprawling and complex cyberattack dubbed BADBOX 2.0. BADBOX 2.0 is a major adaptation and expansion of the operation described in the Satori team’s 2023 BADBOX disclosure, and is the largest botnet of infected connected TV (CTV) devices ever uncovered. (The German government took down a portion of BADBOX’s infrastructure in December 2024.) The BADBOX 2.0 investigation shows how the threat actors shifted their targets and tactics after the BADBOX disruption in 2023.

This attack centered primarily on low-cost, ‘off-brand’, uncertified Android Open Source Project devices that carry a backdoor. These backdoored devices gave the threat actors the access they needed to launch several kinds of fraud schemes, including the following:

- Residential proxy services: selling access to the device’s IP address without the user’s permission

- Ad fraud – hidden ad units: using built-in content apps to render hidden ads

- Ad fraud – hidden WebViews: launching hidden browser windows that navigate to a collection of game sites owned by the threat actors

- Click fraud: navigating an infected device to a low-quality domain and clicking on an ad present on the page

While HUMAN and its partners currently observe the threat actors pushing payloads to devices to implement these fraud schemes, the attackers are not limited to these four types of fraud. They have the technical capability to push any functionality they want to a device by loading and executing an APK file of their choosing, or by instructing the device to execute code. For example, researchers at Trend Micro who collaborated with HUMAN on this investigation observed one of the threat actor groups (Lemon Group) deploying payloads to programmatically create accounts in online services, collect sensitive data from devices, and more.

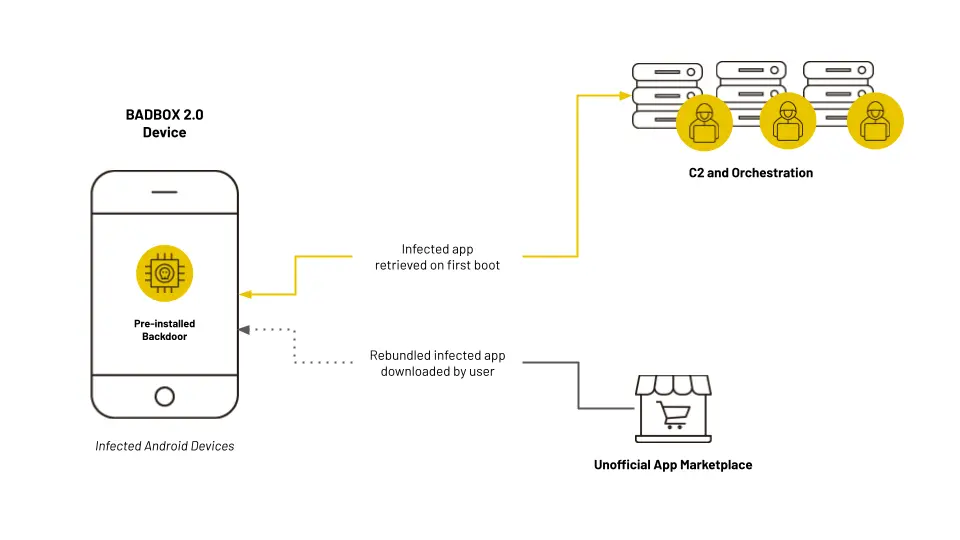

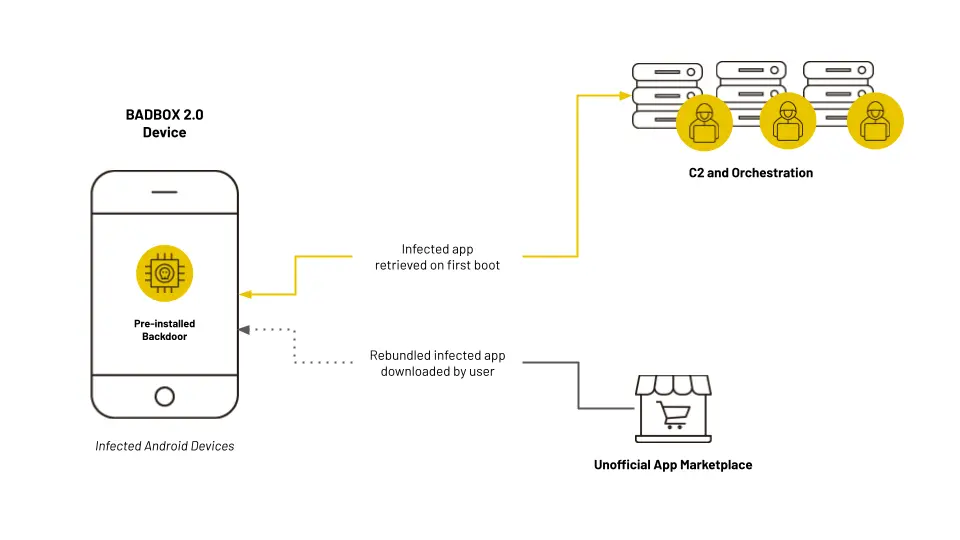

The backdoor underpinning the BADBOX 2.0 operation is distributed in three ways:

- pre-installed on the device, in a similar fashion to the primary BADBOX backdoor

- retrieved from a command-and-control (C2) server contacted by the device on first boot

- downloaded from third-party marketplaces by unsuspecting users

~Diagram outlining the three backdoor delivery mechanisms for BADBOX 2.0~


Satori researchers identified four threat actor groups involved in BADBOX 2.0:

- SalesTracker Group (so named by HUMAN for a module the group uses to monitor infected devices) is the group researchers believe is responsible for the BADBOX operation. It also staged and managed the C2 infrastructure for BADBOX 2.0.

- MoYu Group (so named by HUMAN after the residential proxy services the threat actors offer on top of BADBOX 2.0-infected devices) developed the backdoor for BADBOX 2.0, coordinated the variants of that backdoor and the devices on which they would be installed, operated a botnet composed of a subset of BADBOX 2.0-infected devices, operated a click fraud campaign, and staged the capabilities to run a programmatic ad fraud campaign.

- Lemon Group, a threat actor group first reported by Trend Micro, is connected both to the residential proxy services created through the BADBOX operation and to an ad fraud campaign that runs across a network of HTML5 (H5) game websites using BADBOX 2.0-infected devices.

- LongTV is a brand run by a Malaysian internet and media company that operates connected TV (CTV) devices and develops apps for those devices and for other Android Open Source Project devices. Several LongTV-developed apps are responsible for an ad fraud campaign centered on hidden ads and based on an “evil twin” technique, as described by Satori researchers in the 2024 Konfety disclosure. (This technique centers on malicious apps, distributed through unofficial channels, that present themselves as similar benign apps distributed through official channels, with which they share a package name.)

These groups were connected to one another through shared infrastructure (common C2 servers) and historical and current business ties.
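
The “evil twin” technique noted in the LongTV entry above relies on a malicious app sharing a package name with a benign app from an official channel. As a rough illustration only, the sketch below flags candidate evil twins in an app inventory. Everything in it is hypothetical: the `AppRecord` type, the `channel` labels, and the idea of comparing signing-certificate fingerprints are assumptions made for this example, not the method Satori researchers used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppRecord:
    """Hypothetical inventory entry for one observed app build."""
    package_name: str   # e.g. "com.example.player"
    channel: str        # "official" or "unofficial" (assumed labels)
    cert_sha256: str    # signing-certificate fingerprint (assumed signal)


def find_evil_twin_candidates(records):
    """Return unofficial builds that reuse an official package name
    but are signed with a certificate not seen on the official build."""
    # Collect the certificates seen for each package on official channels.
    official_certs = {}
    for record in records:
        if record.channel == "official":
            official_certs.setdefault(record.package_name, set()).add(
                record.cert_sha256
            )

    # An unofficial build is a candidate when its package name matches an
    # official app but its signing certificate does not.
    return [
        record
        for record in records
        if record.channel == "unofficial"
        and record.package_name in official_certs
        and record.cert_sha256 not in official_certs[record.package_name]
    ]
```

A shared package name with a mismatched certificate is only a starting point for review. Legitimate re-signed or regional builds could also match, so any hit would still need manual analysis.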

Satori researchers discovered BADBOX 2.0 while monitoring the remaining BADBOX infrastructure for adaptation following its disruption; as a matter of course, Satori researchers keep an eye on threats long after they’re first disrupted. In the case of BADBOX 2.0, researchers had been watching the threat actors for more than a year between the first BADBOX disclosure and BADBOX 2.0.

Researchers found new C2 servers that hosted a list of APKs targeting Android Open Source Project devices similar to those impacted by BADBOX. Pulling on those threads led the researchers to the various threats on each device. Through collaboration with Google, Trend Micro, Shadowserver, and other HUMAN partners, BADBOX 2.0 has been partially disrupted.
