this post was submitted on 18 Sep 2023

843 points (98.1% liked)

Linux


From Wikipedia, the free encyclopedia

Linux is a family of open source Unix-like operating systems based on the Linux kernel, an operating system kernel first released on September 17, 1991 by Linus Torvalds. Linux is typically packaged in a Linux distribution (or distro for short).

Distributions include the Linux kernel and supporting system software and libraries, many of which are provided by the GNU Project. Many Linux distributions use the word "Linux" in their name, but the Free Software Foundation uses the name GNU/Linux to emphasize the importance of GNU software, causing some controversy.

Rules

- Posts must be relevant to operating systems running the Linux kernel. GNU/Linux or otherwise.

- No misinformation

- No NSFW content

- No hate speech, bigotry, etc


Community icon by Alpár-Etele Méder, licensed under CC BY 3.0

founded 6 years ago


What do you use instead?

atool is a good alternative apparently, but I am still new to it

I use zip/unzip if I have the option

I avoid it and use zip or 7z if I can. But for some crazy reason some people still insist on using that garbage tool and I have no idea why.

Are zip and 7z really that much easier?

I get that `tar` needs an `f` for no-longer-relevant reasons whereas other tools don't, but I never understood the meme about it beyond that. Is `c` for "create" really that much worse than `a` for "add"?

`7z x` to extract makes sense. `unzip` even more. No need for crazy mnemonics or colorful explanation images. It's complete nonsense that people are ok with that.

If you want to do more than just "pack this directory up just as it is" you'll pretty quickly get to the limits of zip. tar is way more flexible about selecting partial contents and transformation on packing or extraction.
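To make "selecting partial contents and transformation on packing" concrete, here is a minimal sketch using Python's standard `tarfile` module instead of the `tar` CLI (GNU tar offers similar ideas through options such as `--exclude` and `--transform`). The helper names, the `.c` filter, and the `project-1.0/` prefix are all made up for illustration.

```python
import io
import tarfile


def pack_selected(files, keep_suffix=".c", prefix="project-1.0/"):
    """Pack only matching entries of `files` (name -> bytes) into an in-memory tar.

    Every kept member is renamed so that it lives under `prefix`.
    """
    buf = io.BytesIO()
    # mode "w" writes a plain, uncompressed tar stream
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in sorted(files.items()):
            if not name.endswith(keep_suffix):
                continue  # selection: skip everything that does not match
            info = tarfile.TarInfo(name=prefix + name)  # transformation: new path
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def list_members(archive_bytes):
    """Return the member names of a tar archive held in memory."""
    with tarfile.open(fileobj=io.BytesIO(archive_bytes), mode="r") as tar:
        return tar.getnames()


def extract_one(archive_bytes, member):
    """Read one member's contents without unpacking anything else."""
    with tarfile.open(fileobj=io.BytesIO(archive_bytes), mode="r") as tar:
        return tar.extractfile(member).read()
```

Packing `{"src/main.c": ..., "README.md": ...}` with the defaults keeps only the `.c` file and stores it under `project-1.0/src/main.c`; `extract_one` then pulls that single member back out.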

100% of tarballs that I had to deal with were instances of "pack this directory up just as it is" because it is usually people distributing source code who insist on using tarballs.

Because everyone else does, and if everyone else does, then I must, and if I do, then everyone else must, and then everyone else does.

Repeat loop.

For all I care it goes on the same garbage dump as LaTeX.

I think that's pretty mean towards the free software developers spending their spare time on LaTeX and the GNU utils.

I and many academics use LaTeX, and I personally am very happy to be able to use something which is plain text and FLOSS.

I also don't see your problems with tar; it does one thing and it does it well enough.

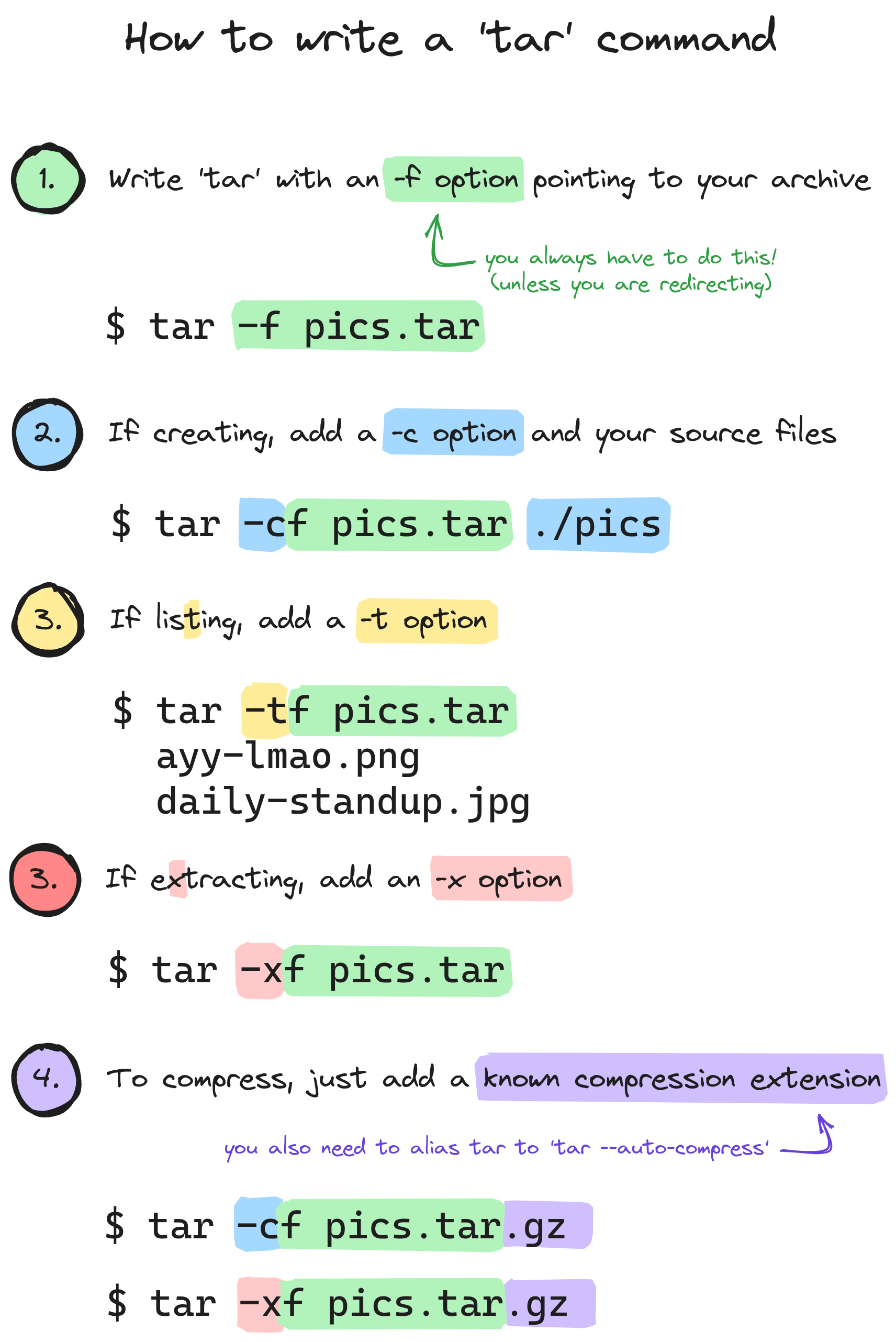

The problem is the usage of the tool, which people invent different mnemonics for because its UX is stuck in 1986 and the only people who remember the parameters are those who use it daily.

Similar thing for LaTeX: it's so absurdly crusty and painful to work with that it's only used by people who have no alternative.

//ETA

Also, I don't want to be mean towards the maintainers of LaTeX. I'm sorry if I made any LaTeX maintainer reading this upset or feel inferior. Working on the LaTeX code is surely no easy endeavour and people who still do that in 2023 deserve a good amount of respect.

But every time I had to work with LaTeX or any of its wrappers, it was just pure frustration at the usage and the whole project. The absolute chaos of different distributions, templates, classes and whatnot is something I never want to experience again.

Speaking of which, you might want to check out typst if you haven't heard of it - I really hope this replaces most uses of LaTeX in the next few years.

Thanks, I'll keep an eye on that project. I did try pandoc and LyX in the past to ease the pain, but typst appears to have the courage to finally let LaTeX be and not build a new wrapper around it.

You do you. Compression is a waste of time; storage is cheap in that you can get more, but time? Time, you never get back.

Yes, and I'd rather not have my time wasted waiting on thousands of small files to transfer when I could compress them into one file that transfers in much less time.

Tar achieves the same effect without the time spent compressing and decompressing.
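A rough sketch of that point, again with Python's standard `tarfile` module rather than the CLI: mode `"w"` only bundles many files into one stream, while `"w:gz"` bundles and gzip-compresses. The `bundle` helper and the sample file names are hypothetical.

```python
import io
import tarfile


def bundle(files, mode):
    """Write `files` (name -> bytes) into one in-memory archive.

    mode "w" is plain tar (bundling only); mode "w:gz" adds gzip compression.
    """
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode=mode) as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def member_count(archive_bytes):
    """Count members; mode "r" detects compression transparently."""
    with tarfile.open(fileobj=io.BytesIO(archive_bytes), mode="r") as tar:
        return len(tar.getnames())
```

Either way you end up with a single file to transfer. One thing the sketch makes visible: the plain tar comes out larger than the raw data, because every member gets a 512-byte header and is padded to a 512-byte boundary, so with thousands of tiny files the uncompressed bundle carries noticeable overhead.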

as in time wasted transferring a highly compressible file that you didn't bother compressing first?

it's only a waste of time when the file format is already compressed.

Unless your link speed is in dial-up modem territory, it can often take longer to compress, transfer, and decompress than to just transfer directly.

unless you're picking a slow compressor that's not true at all

Add a zero to your link speed - 10 Gb/s
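Both sides of this can be right depending on the numbers, so here is a back-of-envelope sketch. It assumes the three steps run one after another (no streaming pipeline), and every figure in the usage note is an invented example, not a measurement of any real compressor.

```python
def direct_seconds(size_mb, link_mb_s):
    """Time to send the data as-is."""
    return size_mb / link_mb_s


def compressed_seconds(size_mb, link_mb_s, ratio, compress_mb_s, decompress_mb_s):
    """Time to compress, send the smaller payload, then decompress.

    `ratio` is compressed size / original size (0.4 means 60% smaller).
    Compressor speeds are in MB/s of uncompressed data.
    """
    compress = size_mb / compress_mb_s
    transfer = size_mb * ratio / link_mb_s
    decompress = size_mb / decompress_mb_s
    return compress + transfer + decompress


def break_even_link_mb_s(ratio, compress_mb_s, decompress_mb_s):
    """Link speed below which compressing first is the faster option."""
    return (1 - ratio) / (1 / compress_mb_s + 1 / decompress_mb_s)
```

With a hypothetical compressor at 500 MB/s in, 1500 MB/s out, and a ratio of 0.4, the break-even link speed is about 225 MB/s, roughly 1.8 Gb/s. On a 100 Mb/s link (12.5 MB/s) compressing a 10 GB file first wins easily; on a 10 Gb/s link (1250 MB/s) sending it directly wins. A faster compressor or a more compressible file moves that threshold up.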