this post was submitted on 05 Feb 2024

667 points (87.9% liked)

Memes

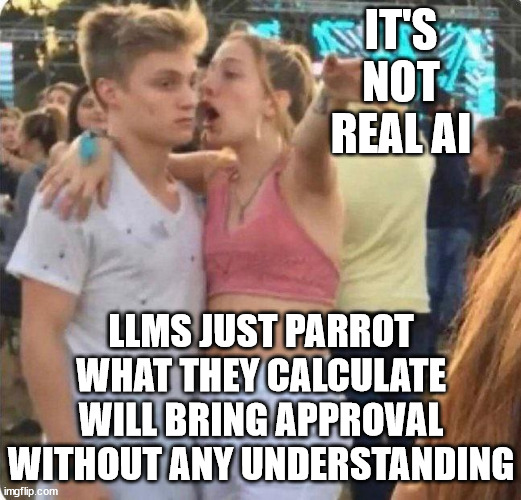

The way I've come to understand it is that LLMs are intelligent in the same way your subconscious is intelligent.

It works off knee-jerk "this feels right" logic, which is why generated images look like dreams: realistic until you examine them closely.

We all have knee-jerk responses to situations and questions, but the difference is that we filter them through our conscious mind, which brings long-term thinking and our own choices into the mix.

LLMs just keep getting better at the "this feels right" stage, which is why completely novel or niche situations can still trip them up: they haven't developed enough "reflexes" for that problem yet.

LLMs are intelligent in the same way books are intelligent. What makes LLMs really cool is that instead of searching at the book or page granularity, they search at the word granularity. They're not thinking, but all the thinking was already done for them by humans who encoded their intelligence into words. It's still incredibly powerful. At its best, it could mean that no task ever needs to be performed by a human twice, which would bring immense efficiency gains for anything information-based.

ah, yes, prejudice

They also reason, which is really weird.