this post was submitted on 05 Feb 2024


Memes


That's a good way of explaining it. I suppose you're using a stricter definition of summary than I was.

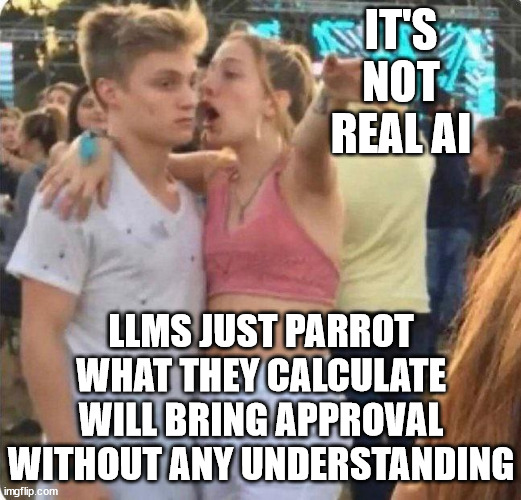

I think it's really important to keep in mind the separation between doing a task and producing something which looks like the output of a task when talking about these things. The reason is that their output is tremendously convincing regardless of its accuracy, and since writing text is something we have only ever seen human minds do, it's easy to ascribe intent to the model's output that we have no reason to believe is there.

Amazingly, it turns out that merely producing something which looks like the output of a task often accomplishes the task along the way, apparently by accident. I have no idea why merely predicting the next plausible word can mean that the model emits something similar to what I would write down if I tried to summarise an article! That's fascinating! But because it isn't actually setting out to do that, there's no guarantee it did, and if I don't check, a wrong output will be indistinguishable to me from a right one, because producing plausible text is what the models are built to do above all else.

So I think that's why we need to keep them in closed loops of person -> model -> person. Explaining why, and intuiting whether a particular application is potentially dangerous or not, is hard if we don't maintain a clear separation between the different processes driving human vs LLM text output.

Yeah this is basically how I think of them too. It's fascinating that it works.

You are so extremely outdated in your understanding, for one who attacks others for not implementing their own LLM.

They are so far beyond the point you are discussing atm. Look at the AutoGen and MemGPT approaches, and at how agent networks can solve problems and develop far beyond where we were years ago.

It really does not matter if you implement your own LLM.

Then stay out of the loop for half a year

It turned out that it's quite useless to debate the parrot catchphrase, because all intelligence is parroting

It's just not useful to pretend they only "guess" what a summary of an article is

They don't. It's not how they work, and you should know that if you made one.

They, uh, still do the same thing fundamentally.

Altman isn't gonna let you blow him dude

No, they don't, and your idiotic personal attacks won't change how the tech works. You are just wrong. I don't love them, and I don't care about Altman. I was just trying to tell you that you are spreading misinformation. But nah, defensive slurs it is.